DAST API analyzer (ULTIMATE)

DAST API analyzer became the default analyzer for on-demand DAST API scans in GitLab 15.6.

Perform Dynamic Application Security Testing (DAST) of web APIs to help discover bugs and potential security issues that other QA processes may miss. Use DAST API tests in addition to other GitLab Secure security scanners and your own test processes. You can run DAST API tests as part of your CI/CD workflow, on-demand, or both.

WARNING: Do not run DAST API testing against a production server. Not only can it perform any function that the API can, it may also trigger bugs in the API. This includes actions like modifying and deleting data. Only run DAST API against a test server.

DAST API can test the following web API types:

- REST API

- SOAP

- GraphQL

- Form bodies, JSON, or XML

When DAST API scans run

When run in your CI/CD pipeline, DAST API scanning runs in the dast stage by default. To ensure DAST API scanning examines the latest code, make sure your CI/CD pipeline deploys changes to a test environment in a stage before the dast stage.
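As a minimal sketch of this ordering, the following configuration places a deployment stage ahead of the dast stage. The job name `deploy-test-env` and the script `./scripts/deploy-to-test.sh` are hypothetical placeholders, not part of the DAST API template; replace them with your own deployment step.

```yaml
stages:
  - deploy   # runs first, so the test environment has the latest code
  - dast     # default stage used by DAST API scanning

include:
  - template: DAST-API.gitlab-ci.yml

# Hypothetical deploy job; the script path is an assumption for illustration.
deploy-test-env:
  stage: deploy
  script:
    - ./scripts/deploy-to-test.sh
```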

If your pipeline is configured to deploy to the same web server on each run, running a pipeline while another is still running could cause a race condition in which one pipeline overwrites the code from another. The API to be scanned should be excluded from changes for the duration of a DAST API scan. The only changes to the API should be from the DAST API scanner. Changes made to the API (for example, by users, scheduled tasks, database changes, code changes, other pipelines, or other scanners) during a scan could cause inaccurate results.

Example DAST API scanning configurations

The following projects demonstrate DAST API scanning:

- Example OpenAPI v2 Specification project

- Example HTTP Archive (HAR) project

- Example Postman Collection project

- Example GraphQL project

- Example SOAP project

- Authentication Token using Selenium

Targeting API for DAST scanning

You can specify the API you want to scan by using:

OpenAPI Specification

- Support for OpenAPI Specification using YAML format was introduced in GitLab 14.0.

- Support to generate media type application/xml was introduced in GitLab 14.8.

- Support to select media types was introduced in GitLab 14.10.

The OpenAPI Specification (formerly the Swagger Specification) is an API description format for REST APIs. This section shows you how to configure DAST API scanning using an OpenAPI Specification to provide information about the target API to test. OpenAPI Specifications are provided as a file system resource or URL. Both JSON and YAML OpenAPI formats are supported.

DAST API uses an OpenAPI document to generate the request body. When a request body is required, the body generation is limited to these body types:

- application/x-www-form-urlencoded

- multipart/form-data

- application/json

- application/xml

OpenAPI and media types

A media type (formerly known as MIME type) is an identifier for file formats and format contents transmitted. An OpenAPI document lets you specify that a given operation can accept different media types, so a given request can send data using different file content. For example, a PUT /user operation to update user data could accept data in either XML (media type application/xml) or JSON (media type application/json) format.

OpenAPI 2.x lets you specify the accepted media types globally or per operation, and OpenAPI 3.x lets you specify the accepted media types per operation. DAST API will check the listed media types, and try to produce sample data for each supported media type.

- In GitLab 14.10 and later, the default behavior is to select one of the supported media types to use. The first supported media type is chosen from the list. This behavior is configurable.

- In GitLab 14.9 and earlier, the default behavior is to perform testing using all supported media types. This means if two media types are listed (for example, application/json and application/xml), tests are performed using JSON, and then the same tests are performed using XML.

Testing the same operation (for example, POST /user) using different media types (for example, application/json and application/xml) is not always desirable.

For example, if the target application executes the same code regardless of the request content type, it will take longer to finish the test session, and it may report duplicated vulnerabilities related to the request body depending on the target app.

The environment variable DAST_API_OPENAPI_ALL_MEDIA_TYPES lets you specify whether or not to use all supported media types instead of one when generating requests for a given operation. When the environment variable DAST_API_OPENAPI_ALL_MEDIA_TYPES is set to any value, DAST API tries to generate requests for all supported media types instead of one in a given operation. This will cause testing to take longer as testing is repeated for each provided media type.
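As a sketch of the setting described above, the variable can be defined next to the OpenAPI location. Because any value enables the behavior, the value `"true"` is only an assumption chosen for readability, and the specification file name is taken from the examples in this document.

```yaml
variables:
  DAST_API_OPENAPI: test-api-specification.json
  # Any value enables testing with all supported media types.
  # "true" is an arbitrary choice for readability.
  DAST_API_OPENAPI_ALL_MEDIA_TYPES: "true"
```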

Alternatively, the variable DAST_API_OPENAPI_MEDIA_TYPES is used to provide a list of media types that will each be tested. Providing more than one media type causes testing to take longer, as testing is performed for each media type selected. When the environment variable DAST_API_OPENAPI_MEDIA_TYPES is set to a list of media types, only the listed media types are included when creating requests.

Multiple media types in DAST_API_OPENAPI_MEDIA_TYPES are separated by a colon (:). For example, to limit request generation to the media types application/x-www-form-urlencoded and multipart/form-data, set the environment variable DAST_API_OPENAPI_MEDIA_TYPES to application/x-www-form-urlencoded:multipart/form-data. Only supported media types in this list are included when creating requests; unsupported media types are always skipped. A media type can contain several sections. For example, application/vnd.api+json; charset=UTF-8 follows the structure type "/" [tree "."] subtype ["+" suffix]* [";" parameter]. Parameters are not taken into account when filtering media types during request generation.
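The colon-separated list from the example above might look like this in a .gitlab-ci.yml file. This is a sketch only; the value is quoted as a precaution because it contains a colon, and the specification file name is taken from the examples in this document.

```yaml
variables:
  DAST_API_OPENAPI: test-api-specification.json
  # Limit request generation to these two media types (colon-separated).
  DAST_API_OPENAPI_MEDIA_TYPES: "application/x-www-form-urlencoded:multipart/form-data"
```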

The environment variables DAST_API_OPENAPI_ALL_MEDIA_TYPES and DAST_API_OPENAPI_MEDIA_TYPES allow you to decide how to handle media types. These settings are mutually exclusive. If both are enabled, DAST API reports an error.

Configure DAST API with an OpenAPI Specification

To configure DAST API scanning with an OpenAPI Specification:

- Include the DAST-API.gitlab-ci.yml template in your .gitlab-ci.yml file.

- The configuration file has several testing profiles defined with different checks enabled. We recommend that you start with the Quick profile. Testing with this profile completes faster, allowing for easier configuration validation. Provide the profile by adding the DAST_API_PROFILE CI/CD variable to your .gitlab-ci.yml file.

- Provide the location of the OpenAPI Specification as either a file or URL. Specify the location by adding the DAST_API_OPENAPI variable.

- The target API instance's base URL is also required. Provide it by using the DAST_API_TARGET_URL variable or an environment_url.txt file.

  Adding the URL in an environment_url.txt file at your project's root is great for testing in dynamic environments. To run DAST API against an app dynamically created during a GitLab CI/CD pipeline, have the app persist its URL in an environment_url.txt file. DAST API automatically parses that file to find its scan target. You can see an example of this in our Auto DevOps CI YAML.
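One way to persist the URL is sketched below. It assumes that an earlier job writes environment_url.txt at the project root and passes it to later stages as a job artifact; the job name, the deploy script, and the URL are hypothetical.

```yaml
# Hypothetical deploy job that records the dynamic environment URL.
deploy-review-app:
  stage: deploy
  script:
    - ./scripts/deploy-review-app.sh            # assumed deployment step
    - echo "http://test-deployment/" > environment_url.txt
  artifacts:
    paths:
      - environment_url.txt                      # made available to the dast stage
```

With a file like this in place, DAST_API_TARGET_URL can be left unset, because the scan target is read from the file.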

Complete example configuration of using an OpenAPI Specification:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/

This is a minimal configuration for DAST API. From here you can:

- Run your first scan.

- Add authentication.

- Learn how to handle false positives.

HTTP Archive (HAR)

The HTTP Archive format (HAR) is an archive file format for logging HTTP transactions. When used with the GitLab DAST API scanner, the HAR file must contain records of calling the web API to test. The DAST API scanner extracts all of the requests and uses them to perform testing.

You can use various tools to generate HAR files:

- Insomnia Core: API client

- Chrome: Browser

- Firefox: Browser

- Fiddler: Web debugging proxy

- GitLab HAR Recorder: Command line

WARNING: HAR files may contain sensitive information such as authentication tokens, API keys, and session cookies. We recommend that you review the HAR file contents before adding them to a repository.

DAST API scanning with a HAR file

To configure DAST API to use a HAR file that provides information about the target API to test:

- Include the DAST-API.gitlab-ci.yml template in your .gitlab-ci.yml file.

- The configuration file has several testing profiles defined with different checks enabled. We recommend that you start with the Quick profile. Testing with this profile completes faster, allowing for easier configuration validation.

  Provide the profile by adding the DAST_API_PROFILE CI/CD variable to your .gitlab-ci.yml file.

- Provide the location of the HAR file. You can provide the location as a file path or URL. URL support was introduced in GitLab 13.10. Specify the location by adding the DAST_API_HAR variable.

- The target API instance's base URL is also required. Provide it by using the DAST_API_TARGET_URL variable or an environment_url.txt file.

  Adding the URL in an environment_url.txt file at your project's root is great for testing in dynamic environments. To run DAST API against an app dynamically created during a GitLab CI/CD pipeline, have the app persist its URL in an environment_url.txt file. DAST API automatically parses that file to find its scan target. You can see an example of this in our Auto DevOps CI YAML.

Complete example configuration of using a HAR file:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_HAR: test-api-recording.har
  DAST_API_TARGET_URL: http://test-deployment/

This example is a minimal configuration for DAST API. From here you can:

- Run your first scan.

- Add authentication.

- Learn how to handle false positives.
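Because the HAR location can also be a URL, a variant of the HAR configuration could reference a remotely hosted recording. This is a sketch only: the host `file-store` and the path are hypothetical and mirror the naming used in other examples in this document.

```yaml
stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  # Hypothetical URL; point this at wherever your HAR file is hosted.
  DAST_API_HAR: http://file-store/files/test-api-recording.har
  DAST_API_TARGET_URL: http://test-deployment/
```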

GraphQL Schema

Support for GraphQL Schema was introduced in GitLab 15.4.

GraphQL is a query language for your API and an alternative to REST APIs. DAST API supports testing GraphQL endpoints in multiple ways:

- Test using the GraphQL Schema. Introduced in GitLab 15.4.

- Test using a recording (HAR) of GraphQL queries.

- Test using a Postman Collection containing GraphQL queries.

This section documents how to test using a GraphQL schema. The GraphQL schema support in DAST API is able to query the schema from endpoints that support introspection. Introspection is enabled by default to allow tools like GraphiQL to work. For details on how to enable introspection, see your GraphQL framework documentation.

DAST API scanning with a GraphQL endpoint URL

The GraphQL support in DAST API is able to query a GraphQL endpoint for the schema.

NOTE: The GraphQL endpoint must support introspection queries for this method to work correctly.

To configure DAST API to use a GraphQL endpoint URL that provides information about the target API to test:

- Include the DAST-API.gitlab-ci.yml template in your .gitlab-ci.yml file.

- Provide the path to the GraphQL endpoint, for example /api/graphql. Specify the location by adding the DAST_API_GRAPHQL variable.

- The target API instance's base URL is also required. Provide it by using the DAST_API_TARGET_URL variable or an environment_url.txt file.

  Adding the URL in an environment_url.txt file at your project's root is great for testing in dynamic environments. See the dynamic environment solutions section of our documentation for more information.

Complete example configuration of using a GraphQL endpoint path:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

dast_api:
  variables:
    DAST_API_GRAPHQL: /api/graphql
    DAST_API_TARGET_URL: http://test-deployment/

This example is a minimal configuration for DAST API. From here you can:

- Run your first scan.

- Add authentication.

- Learn how to handle false positives.

DAST API scanning with a GraphQL Schema file

DAST API can use a GraphQL schema file to understand and test a GraphQL endpoint that has introspection disabled. To use a GraphQL schema file, it must be in the introspection JSON format. A GraphQL schema can be converted to the introspection JSON format using an online third-party tool: https://transform.tools/graphql-to-introspection-json.

To configure DAST API to use a GraphQL schema file that provides information about the target API to test:

- Include the DAST-API.gitlab-ci.yml template in your .gitlab-ci.yml file.

- Provide the GraphQL endpoint path, for example /api/graphql. Specify the path by adding the DAST_API_GRAPHQL variable.

- Provide the location of the GraphQL schema file. You can provide the location as a file path or URL. Specify the location by adding the DAST_API_GRAPHQL_SCHEMA variable.

- The target API instance's base URL is also required. Provide it by using the DAST_API_TARGET_URL variable or an environment_url.txt file.

  Adding the URL in an environment_url.txt file at your project's root is great for testing in dynamic environments. See the dynamic environment solutions section of our documentation for more information.

Complete example configuration of using a GraphQL schema file:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

dast_api:
  variables:
    DAST_API_GRAPHQL: /api/graphql
    DAST_API_GRAPHQL_SCHEMA: test-api-graphql.schema
    DAST_API_TARGET_URL: http://test-deployment/

Complete example configuration of using a GraphQL schema file URL:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

dast_api:
  variables:
    DAST_API_GRAPHQL: /api/graphql
    DAST_API_GRAPHQL_SCHEMA: http://file-store/files/test-api-graphql.schema
    DAST_API_TARGET_URL: http://test-deployment/

This example is a minimal configuration for DAST API. From here you can:

- Run your first scan.

- Add authentication.

- Learn how to handle false positives.

Postman Collection

The Postman API Client is a popular tool that developers and testers use to call various types of APIs. The API definitions can be exported as a Postman Collection file for use with DAST API. When exporting, make sure to select a supported version of Postman Collection: v2.0 or v2.1.

When used with the GitLab DAST API scanner, Postman Collections must contain definitions of the web API to test with valid data. The DAST API scanner extracts all the API definitions and uses them to perform testing.

WARNING: Postman Collection files may contain sensitive information such as authentication tokens, API keys, and session cookies. We recommend that you review the Postman Collection file contents before adding them to a repository.

DAST API scanning with a Postman Collection file

To configure DAST API to use a Postman Collection file that provides information about the target API to test:

- Include the DAST-API.gitlab-ci.yml template in your .gitlab-ci.yml file.

- The configuration file has several testing profiles defined with different checks enabled. We recommend that you start with the Quick profile. Testing with this profile completes faster, allowing for easier configuration validation.

  Provide the profile by adding the DAST_API_PROFILE CI/CD variable to your .gitlab-ci.yml file.

- Provide the location of the Postman Collection file as either a file or URL. Specify the location by adding the DAST_API_POSTMAN_COLLECTION variable.

- The target API instance's base URL is also required. Provide it by using the DAST_API_TARGET_URL variable or an environment_url.txt file.

  Adding the URL in an environment_url.txt file at your project's root is great for testing in dynamic environments. To run DAST API against an app dynamically created during a GitLab CI/CD pipeline, have the app persist its URL in an environment_url.txt file. DAST API automatically parses that file to find its scan target. You can see an example of this in our Auto DevOps CI YAML.

Complete example configuration of using a Postman collection:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection_serviceA.json
  DAST_API_TARGET_URL: http://test-deployment/

This is a minimal configuration for DAST API. From here you can:

- Run your first scan.

- Add authentication.

- Learn how to handle false positives.

Postman variables

- Support for Postman Environment file format was introduced in GitLab 15.1.

- Support for multiple variable files was introduced in GitLab 15.1.

- Support for Postman variable scopes: Global and Environment was introduced in GitLab 15.1.

Variables in Postman Client

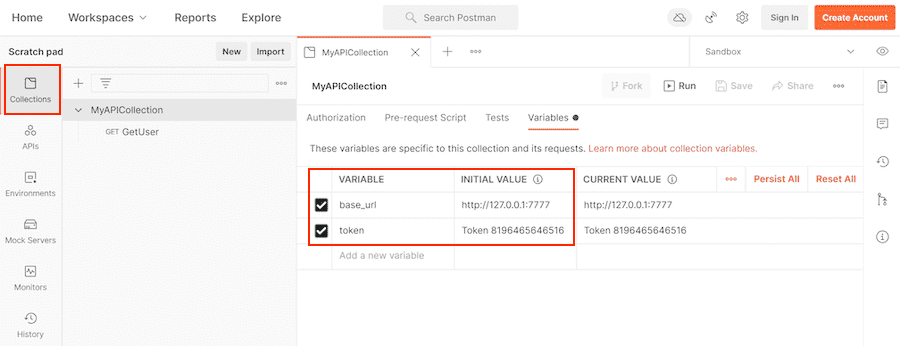

Postman allows the developer to define placeholders that can be used in different parts of the requests. These placeholders are called variables, as explained in using variables. You can use variables to store and reuse values in your requests and scripts. For example, you can edit the collection to add variables to the document:

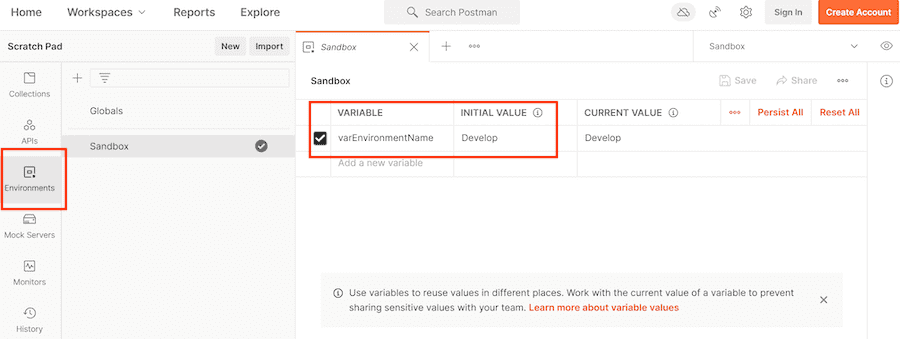

Or alternatively, you can add variables in an environment:

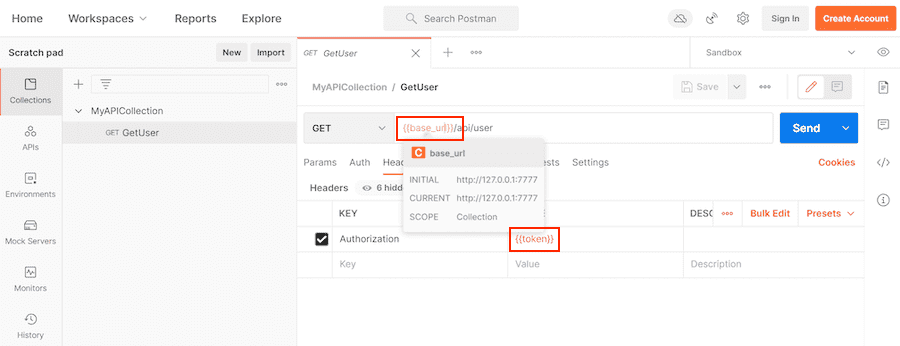

You can then use the variables in sections such as URL, headers, and others:

Postman has grown from a basic client tool with a nice user experience to a more complex ecosystem that allows testing APIs with scripts, creating complex collections that trigger secondary requests, and setting variables along the way. Not every feature in the Postman ecosystem is supported. For example, scripts are not supported. The main focus of the Postman support is to ingest Postman Collection definitions that are used by the Postman Client and their related variables defined in the workspace, environments, and the collections themselves.

Postman allows creating variables in different scopes. Each scope has a different level of visibility in the Postman tools. For example, you can create a variable in a global environment scope that is seen by every operation definition and workspace. You can also create a variable in a specific environment scope that is only visible and used when that specific environment is selected for use. Some scopes are not always available. For example, requests that you create in the Postman Client do not have a local scope, but test scripts do.

Variable scopes in Postman can be a daunting topic and not everyone is familiar with it. We strongly recommend that you read Variable Scopes from Postman documentation before moving forward.

As mentioned above, there are different variable scopes, and each of them has a purpose and can be used to provide more flexibility to your Postman document. There is an important note on how values for variables are computed, as per Postman documentation:

If a variable with the same name is declared in two different scopes, the value stored in the variable with narrowest scope is used. For example, if there is a global variable named username and a local variable named username, the local value is used when the request runs.

The following is a summary of the variable scopes supported by the Postman Client and DAST API:

- Global Environment (Global) scope is a special pre-defined environment that is available throughout a workspace. We can also refer to the global environment scope as the global scope. The Postman Client allows exporting the global environment into a JSON file, which can be used with DAST API.

- Environment scope is a named group of variables created by a user in the Postman Client. The Postman Client supports a single active environment along with the global environment. The variables defined in an active user-created environment take precedence over variables defined in the global environment. The Postman Client allows exporting your environment into a JSON file, which can be used with DAST API.

- Collection scope is a group of variables declared in a given collection. The collection variables are available to the collection where they have been declared and the nested requests or collections. Variables defined in the collection scope take precedence over the global environment scope and also the environment scope. The Postman Client can export one or more collections into a JSON file. This JSON file contains the selected collections, requests, and collection variables.

- DAST API Scope is a new scope added by DAST API to allow users to provide extra variables, or override variables defined in other supported scopes. This scope is not supported by Postman. The DAST API Scope variables are provided using a custom JSON file format. Use this scope to:

  - Override values defined in the environment or collection.

  - Define variables that are otherwise set by scripts.

  - Define a single row of data from the unsupported data scope.

- Data scope is a group of variables whose names and values come from JSON or CSV files. A Postman collection runner like Newman or Postman Collection Runner executes the requests in a collection as many times as there are entries in the JSON or CSV file. A good use case for these variables is to automate tests using scripts in Postman. DAST API does not support reading data from a CSV or JSON file.

- Local scope contains variables that are defined in Postman scripts. DAST API does not support Postman scripts and, by extension, variables defined in scripts. You can still provide values for the script-defined variables by defining them in one of the supported scopes, or in our custom JSON format.

Not all scopes are supported by DAST API and variables defined in scripts are not supported. The following table is sorted by broadest scope to narrowest scope.

| Scope | Postman | DAST API | Comment |
|---|---|---|---|
| Global Environment | Yes | Yes | Special pre-defined environment |
| Environment | Yes | Yes | Named environments |
| Collection | Yes | Yes | Defined in your Postman collection |
| DAST API Scope | No | Yes | Custom scope added by DAST API |
| Data | Yes | No | External files in CSV or JSON format |
| Local | Yes | No | Variables defined in scripts |

For more details on how to define variables and export variables in different scopes, see:

Exporting from Postman Client

The Postman Client lets you export different file formats. For instance, you can export a Postman collection or a Postman environment. The exported environment can be the global environment (which is always available) or any custom environment you have previously created. When you export a Postman Collection, it may contain only declarations for collection and local scoped variables; environment scoped variables are not included.

To get the declarations for environment scoped variables, you have to export one environment at a time. Each exported file only includes variables from the selected environment.

For more details on exporting variables in different supported scopes, see:

DAST API Scope, custom JSON file format

Our custom JSON file format is a JSON object where each object property represents a variable name and the property value represents the variable value. This file can be created using your favorite text editor, or it can be produced by an earlier job in your pipeline.

This example defines two variables base_url and token in the DAST API scope:

{
  "base_url": "http://127.0.0.1/",
  "token": "Token 84816165151"
}

Using scopes with DAST API

The global, environment, collection, and GitLab DAST API scopes are supported in GitLab 15.1 and later. GitLab 15.0 and earlier support only the collection and GitLab DAST API scopes.

The following table provides a quick reference for mapping scope files/URLs to DAST API configuration variables:

| Scope | How to Provide |
|---|---|
| Global Environment | DAST_API_POSTMAN_COLLECTION_VARIABLES |
| Environment | DAST_API_POSTMAN_COLLECTION_VARIABLES |
| Collection | DAST_API_POSTMAN_COLLECTION |
| DAST API Scope | DAST_API_POSTMAN_COLLECTION_VARIABLES |
| Data | Not supported |
| Local | Not supported |

The Postman Collection document automatically includes any collection scoped variables. The Postman Collection is provided with the configuration variable DAST_API_POSTMAN_COLLECTION. This variable can be set to a single exported Postman collection.

Variables from other scopes are provided through the DAST_API_POSTMAN_COLLECTION_VARIABLES configuration variable. The configuration variable supports a comma (,) delimited file list in GitLab 15.1 and later. GitLab 15.0 and earlier support only a single file. The order of the files provided is not important, as the files provide the needed scope information.
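As a sketch of a comma-delimited file list in GitLab 15.1 and later, the following configuration combines an exported global environment, an exported environment, and a DAST API scope file written by an earlier job. The `prepare` stage, the job name, and the file name `dast-api-scope.json` are hypothetical, and passing the generated file through job artifacts is an assumption about how your pipeline shares files.

```yaml
stages:
  - prepare
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

# Hypothetical earlier job that writes a file in the DAST API custom JSON format.
generate-dast-api-scope:
  stage: prepare
  script:
    - |
      echo '{"base_url": "http://test-deployment/"}' > dast-api-scope.json
  artifacts:
    paths:
      - dast-api-scope.json

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection.json
  # Comma-delimited list; the order of the files does not matter.
  DAST_API_POSTMAN_COLLECTION_VARIABLES: global-scope.json,environment-scope.json,dast-api-scope.json
  DAST_API_TARGET_URL: http://test-deployment/
```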

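The configuration variable DAST_API_POSTMAN_COLLECTION_VARIABLES can be set to exported global environment files, exported environment files, or JSON files in the DAST API custom format.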

Undefined Postman variables

There is a chance that the DAST API engine does not find all the variable references that your Postman collection file uses. Possible causes include:

- You are using data or local scoped variables, and as stated previously these scopes are not supported by DAST API. Thus, assuming the values for these variables have not been provided through the DAST API scope, then the values of the data and local scoped variables are undefined.

- A variable name was typed incorrectly, and the name does not match the defined variable.

- Postman Client supports a new dynamic variable that is not supported by DAST API.

When possible, DAST API follows the same behavior as the Postman Client does when dealing with undefined variables. The text of the variable reference remains the same, and there is no text substitution. The same behavior also applies to any unsupported dynamic variables.

For example, if a request definition in the Postman Collection references the variable {{full_url}} and the variable is not found, it is left unchanged with the value {{full_url}}.

Dynamic Postman variables

In addition to variables that a user can define at various scope levels, Postman has a set of pre-defined variables called dynamic variables. The dynamic variables are already defined and their name is prefixed with a dollar sign ($), for instance, $guid. Dynamic variables can be used like any other variable, and in the Postman Client, they produce random values during the request/collection run.

An important difference between DAST API and Postman is that DAST API returns the same value for each usage of the same dynamic variables. This differs from the Postman Client behavior which returns a random value on each use of the same dynamic variable. In other words, DAST API uses static values for dynamic variables while Postman uses random values.

The supported dynamic variables during the scanning process are:

| Variable | Value |
|---|---|
| $guid | 611c2e81-2ccb-42d8-9ddc-2d0bfa65c1b4 |
| $isoTimestamp | 2020-06-09T21:10:36.177Z |
| $randomAbbreviation | PCI |
| $randomAbstractImage | http://no-a-valid-host/640/480/abstract |
| $randomAdjective | auxiliary |
| $randomAlphaNumeric | a |
| $randomAnimalsImage | http://no-a-valid-host/640/480/animals |
| $randomAvatarImage | https://no-a-valid-host/path/to/some/image.jpg |
| $randomBankAccount | 09454073 |
| $randomBankAccountBic | EZIAUGJ1 |
| $randomBankAccountIban | MU20ZPUN3039684000618086155TKZ |
| $randomBankAccountName | Home Loan Account |
| $randomBitcoin | 3VB8JGT7Y4Z63U68KGGKDXMLLH5 |
| $randomBoolean | true |
| $randomBs | killer leverage schemas |
| $randomBsAdjective | viral |
| $randomBsBuzz | repurpose |
| $randomBsNoun | markets |
| $randomBusinessImage | http://no-a-valid-host/640/480/business |
| $randomCatchPhrase | Future-proofed heuristic open architecture |
| $randomCatchPhraseAdjective | Business-focused |
| $randomCatchPhraseDescriptor | bandwidth-monitored |
| $randomCatchPhraseNoun | superstructure |
| $randomCatsImage | http://no-a-valid-host/640/480/cats |
| $randomCity | Spinkahaven |
| $randomCityImage | http://no-a-valid-host/640/480/city |
| $randomColor | fuchsia |
| $randomCommonFileExt | wav |
| $randomCommonFileName | well_modulated.mpg4 |
| $randomCommonFileType | audio |
| $randomCompanyName | Grady LLC |
| $randomCompanySuffix | Inc |
| $randomCountry | Kazakhstan |
| $randomCountryCode | MD |
| $randomCreditCardMask | 3622 |
| $randomCurrencyCode | ZMK |
| $randomCurrencyName | Pound Sterling |
| $randomCurrencySymbol | £ |
| $randomDatabaseCollation | utf8_general_ci |
| $randomDatabaseColumn | updatedAt |
| $randomDatabaseEngine | Memory |
| $randomDatabaseType | text |
| $randomDateFuture | Tue Mar 17 2020 13:11:50 GMT+0530 (India Standard Time) |
| $randomDatePast | Sat Mar 02 2019 09:09:26 GMT+0530 (India Standard Time) |
| $randomDateRecent | Tue Jul 09 2019 23:12:37 GMT+0530 (India Standard Time) |
| $randomDepartment | Electronics |
| $randomDirectoryPath | /usr/local/bin |
| $randomDomainName | trevor.info |
| $randomDomainSuffix | org |
| $randomDomainWord | jaden |
| $randomEmail | Iva.Kovacek61@no-a-valid-host.com |
| $randomExampleEmail | non-a-valid-user@example.net |
| $randomFashionImage | http://no-a-valid-host/640/480/fashion |
| $randomFileExt | war |
| $randomFileName | neural_sri_lanka_rupee_gloves.gdoc |
| $randomFilePath | /home/programming_chicken.cpio |
| $randomFileType | application |
| $randomFirstName | Chandler |
| $randomFoodImage | http://no-a-valid-host/640/480/food |
| $randomFullName | Connie Runolfsdottir |
| $randomHexColor | #47594a |
| $randomImageDataUri | data:image/svg+xml;charset=UTF-8,%3Csvg%20xmlns%3D%22http%3A%2F%2Fwww.w3.org%2F2000%2Fsvg%22%20version%3D%221.1%22%20baseProfile%3D%22full%22%20width%3D%22undefined%22%20height%3D%22undefined%22%3E%20%3Crect%20width%3D%22100%25%22%20height%3D%22100%25%22%20fill%3D%22grey%22%2F%3E%20%20%3Ctext%20x%3D%220%22%20y%3D%2220%22%20font-size%3D%2220%22%20text-anchor%3D%22start%22%20fill%3D%22white%22%3Eundefinedxundefined%3C%2Ftext%3E%20%3C%2Fsvg%3E |
| $randomImageUrl | http://no-a-valid-host/640/480 |
| $randomIngverb | navigating |
| $randomInt | 494 |
| $randomIP | 241.102.234.100 |
| $randomIPV6 | dbe2:7ae6:119b:c161:1560:6dda:3a9b:90a9 |
| $randomJobArea | Mobility |
| $randomJobDescriptor | Senior |
| $randomJobTitle | International Creative Liaison |
| $randomJobType | Supervisor |
| $randomLastName | Schneider |
| $randomLatitude | 55.2099 |
| $randomLocale | ny |
| $randomLongitude | 40.6609 |
| $randomLoremLines | Ducimus in ut mollitia.\nA itaque non.\nHarum temporibus nihil voluptas.\nIste in sed et nesciunt in quaerat sed. |
| $randomLoremParagraph | Ab aliquid odio iste quo voluptas voluptatem dignissimos velit. Recusandae facilis qui commodi ea magnam enim nostrum quia quis. Nihil est suscipit assumenda ut voluptatem sed. Esse ab voluptas odit qui molestiae. Rem est nesciunt est quis ipsam expedita consequuntur. |
| $randomLoremParagraphs | Voluptatem rem magnam aliquam ab id aut quaerat. Placeat provident possimus voluptatibus dicta velit non aut quasi. Mollitia et aliquam expedita sunt dolores nam consequuntur. Nam dolorum delectus ipsam repudiandae et ipsam ut voluptatum totam. Nobis labore labore recusandae ipsam quo. |
| $randomLoremSentence | Molestias consequuntur nisi non quod. |
| $randomLoremSentences | Et sint voluptas similique iure amet perspiciatis vero sequi atque. Ut porro sit et hic. Neque aspernatur vitae fugiat ut dolore et veritatis. Ab iusto ex delectus animi. Voluptates nisi iusto. Impedit quod quae voluptate qui. |
| $randomLoremSlug | eos-aperiam-accusamus, beatae-id-molestiae, qui-est-repellat |
| $randomLoremText | Quisquam asperiores exercitationem ut ipsum. Aut eius nesciunt. Et reiciendis aut alias eaque. Nihil amet laboriosam pariatur eligendi. Sunt ullam ut sint natus ducimus. Voluptas harum aspernatur soluta rem nam. |
| $randomLoremWord | est |
| $randomLoremWords | vel repellat nobis |
| $randomMACAddress | 33:d4:68:5f:b4:c7 |
| $randomMimeType | audio/vnd.vmx.cvsd |
| $randomMonth | February |
| $randomNamePrefix | Dr. |
| $randomNameSuffix | MD |
| $randomNatureImage | http://no-a-valid-host/640/480/nature |
| $randomNightlifeImage | http://no-a-valid-host/640/480/nightlife |
| $randomNoun | bus |
| $randomPassword | t9iXe7COoDKv8k3 |
| $randomPeopleImage | http://no-a-valid-host/640/480/people |
| $randomPhoneNumber | 700-008-5275 |
| $randomPhoneNumberExt | 27-199-983-3864 |
| $randomPhrase | You can't program the monitor without navigating the mobile XML program! |
| $randomPrice | 531.55 |
| $randomProduct | Pizza |
| $randomProductAdjective | Unbranded |
| $randomProductMaterial | Steel |
| $randomProductName | Handmade Concrete Tuna |
| $randomProtocol | https |
| $randomSemver | 7.0.5 |
| $randomSportsImage | http://no-a-valid-host/640/480/sports |
| $randomStreetAddress | 5742 Harvey Streets |
| $randomStreetName | Kuhic Island |
| $randomTransactionType | payment |
| $randomTransportImage | http://no-a-valid-host/640/480/transport |
| $randomUrl | https://no-a-valid-host.net |
| $randomUserAgent | Mozilla/5.0 (Macintosh; U; Intel Mac OS X 10.9.8; rv:15.6) Gecko/20100101 Firefox/15.6.6 |
| $randomUserName | Jarrell.Gutkowski |
| $randomUUID | 6929bb52-3ab2-448a-9796-d6480ecad36b |
| $randomVerb | navigate |
| $randomWeekday | Thursday |
| $randomWord | withdrawal |
| $randomWords | Samoa Synergistic sticky copying Grocery |
| $timestamp | 1562757107 |

Example: Global Scope

In this example, the global scope is exported from the Postman Client as global-scope.json and provided to DAST API through the DAST_API_POSTMAN_COLLECTION_VARIABLES configuration variable.

Here is an example of using DAST_API_POSTMAN_COLLECTION_VARIABLES:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection.json
  DAST_API_POSTMAN_COLLECTION_VARIABLES: global-scope.json
  DAST_API_TARGET_URL: http://test-deployment/

Example: Environment Scope

In this example, the environment scope is exported from the Postman Client as environment-scope.json and provided to DAST API through the DAST_API_POSTMAN_COLLECTION_VARIABLES configuration variable.

Here is an example of using DAST_API_POSTMAN_COLLECTION_VARIABLES:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection.json
  DAST_API_POSTMAN_COLLECTION_VARIABLES: environment-scope.json
  DAST_API_TARGET_URL: http://test-deployment/

Example: Collection Scope

The collection scope variables are included in the exported Postman Collection file and provided through the DAST_API_POSTMAN_COLLECTION configuration variable.

Here is an example of using DAST_API_POSTMAN_COLLECTION:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection.json
  DAST_API_TARGET_URL: http://test-deployment/

Example: DAST API Scope

The DAST API Scope is used for two main purposes: defining data and local scope variables that are not supported by DAST API, and changing the value of an existing variable defined in another scope. The DAST API Scope is provided through the DAST_API_POSTMAN_COLLECTION_VARIABLES configuration variable.

Here is an example of using DAST_API_POSTMAN_COLLECTION_VARIABLES:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection.json
  DAST_API_POSTMAN_COLLECTION_VARIABLES: dast-api-scope.json
  DAST_API_TARGET_URL: http://test-deployment/

The file dast-api-scope.json uses our custom JSON file format. This JSON is an object with key-value pairs for properties. The keys are the variables' names, and the values are the variables' values. For example:

{
  "base_url": "http://127.0.0.1/",
  "token": "Token 84816165151"
}

Example: Multiple Scopes

In this example, a global scope, environment scope, and collection scope are configured. The first step is to export our various scopes.

- Export the global scope as global-scope.json

- Export the environment scope as environment-scope.json

- Export the Postman Collection which includes the collection scope as postman-collection.json

The Postman Collection is provided using the DAST_API_POSTMAN_COLLECTION variable, while the other scopes are provided using the DAST_API_POSTMAN_COLLECTION_VARIABLES variable. DAST API can identify which scope the provided files match using data provided in each file.

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection.json
  DAST_API_POSTMAN_COLLECTION_VARIABLES: global-scope.json,environment-scope.json
  DAST_API_TARGET_URL: http://test-deployment/

Example: Changing a Variable's Value

When using exported scopes, it's often the case that the value of a variable must be changed for use with DAST API. For example, the collection scope might contain a variable named api_version with a value of v2, while your test needs a value of v1. Instead of modifying the exported collection to change the value, the DAST API scope can be used to change its value. This works because the DAST API scope takes precedence over all other scopes.

The collection scope variables are included in the exported Postman Collection file and provided through the DAST_API_POSTMAN_COLLECTION configuration variable.

The DAST API Scope is provided through the DAST_API_POSTMAN_COLLECTION_VARIABLES configuration variable, but first, we must create the file.

The file dast-api-scope.json uses our custom JSON file format. This JSON is an object with key-value pairs for properties. The keys are the variables' names, and the values are the variables' values. For example:

{
  "api_version": "v1"
}

Our CI definition:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection.json
  DAST_API_POSTMAN_COLLECTION_VARIABLES: dast-api-scope.json
  DAST_API_TARGET_URL: http://test-deployment/

Example: Changing a Variable's Value with Multiple Scopes

When using exported scopes, it's often the case that the value of a variable must be changed for use with DAST API. For example, an environment scope might contain a variable named api_version with a value of v2, while your test needs a value of v1. Instead of modifying the exported file to change the value, the DAST API scope can be used. This works because the DAST API scope takes precedence over all other scopes.
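The precedence rule can be pictured as a dictionary merge in which the DAST API scope is applied last. The following is only an illustrative sketch, not the analyzer's implementation: the scopes are shown as plain dictionaries rather than parsed export files, the function name is hypothetical, and the relative order of the global, collection, and environment scopes is an assumption that mirrors Postman's usual ordering.

```python
def resolve_variables(global_scope, collection_scope, environment_scope, dast_api_scope):
    """Merge variable scopes; scopes applied later override earlier ones."""
    resolved = {}
    # Assumed order for the first three scopes; the DAST API scope is
    # applied last because it takes precedence over all other scopes.
    for scope in (global_scope, collection_scope, environment_scope, dast_api_scope):
        resolved.update(scope)
    return resolved


resolved = resolve_variables(
    global_scope={"base_url": "http://test-deployment/"},
    collection_scope={"page_size": "20"},
    environment_scope={"api_version": "v2"},
    dast_api_scope={"api_version": "v1"},
)

print(resolved["api_version"])  # v1
```

Variables that the DAST API scope does not mention keep the values from the exported scopes, so the override file only needs to list the entries you want to change.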

In this example, a global scope, environment scope, collection scope, and DAST API scope are configured. The first step is to export and create our various scopes.

- Export the global scope as global-scope.json

- Export the environment scope as environment-scope.json

- Export the Postman Collection which includes the collection scope as postman-collection.json

The DAST API scope is used by creating a file dast-api-scope.json using our custom JSON file format. This JSON is an object with key-value pairs for properties. The keys are the variables' names, and the values are the variables' values. For example:

{
  "api_version": "v1"
}

The Postman Collection is provided using the DAST_API_POSTMAN_COLLECTION variable, while the other scopes are provided using the DAST_API_POSTMAN_COLLECTION_VARIABLES variable. DAST API can identify which scope the provided files match using data provided in each file.

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_POSTMAN_COLLECTION: postman-collection.json
  DAST_API_POSTMAN_COLLECTION_VARIABLES: global-scope.json,environment-scope.json,dast-api-scope.json
  DAST_API_TARGET_URL: http://test-deployment/

Authentication

Authentication is handled by providing the authentication token as a header or cookie. You can provide a script that performs an authentication flow or calculates the token.

HTTP Basic Authentication

HTTP basic authentication is an authentication method built into the HTTP protocol and used in conjunction with transport layer security (TLS).

We recommend that you create a CI/CD variable for the password (for example, TEST_API_PASSWORD), and set it to be masked. You can create CI/CD variables from the GitLab project's page at Settings > CI/CD, in the Variables section.

Because of the limitations on masked variables, you should Base64-encode the password before adding it as a variable.
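As a minimal sketch, the Base64 value can be computed locally with Python's standard library before you paste it into the CI/CD variable. The password shown is a placeholder chosen for illustration, not a value from this documentation.

```python
import base64

# Placeholder password for illustration only; substitute your own.
raw_password = "p@ssw0rd with spaces"

# Base64-encode the UTF-8 bytes of the password.
encoded_password = base64.b64encode(raw_password.encode("utf-8")).decode("ascii")

# Decoding restores the original value, so Base64 is an encoding, not encryption.
round_trip = base64.b64decode(encoded_password).decode("utf-8")

print(encoded_password)
print(round_trip == raw_password)
```

The encoded string contains only letters, digits, `+`, `/`, and `=`, which is what makes it easier to store in a masked variable.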

Finally, add two CI/CD variables to your .gitlab-ci.yml file:

- DAST_API_HTTP_USERNAME: The username for authentication.

- DAST_API_HTTP_PASSWORD_BASE64: The Base64-encoded password for authentication.

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_HAR: test-api-recording.har
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_HTTP_USERNAME: testuser
  DAST_API_HTTP_PASSWORD_BASE64: $TEST_API_PASSWORD

Raw password

If you do not want to Base64-encode the password (or if you are using GitLab 15.3 or earlier), you can provide the raw password in DAST_API_HTTP_PASSWORD instead of using DAST_API_HTTP_PASSWORD_BASE64.

Bearer tokens

Bearer tokens are used by several different authentication mechanisms, including OAuth2 and JSON Web

Tokens (JWT). Bearer tokens are transmitted using the Authorization HTTP header. To use Bearer

tokens with DAST API, you need one of the following:

- A token that doesn't expire.

- A way to generate a token that lasts the length of testing.

- A Python script that DAST API can call to generate the token.

Token doesn't expire

If the Bearer token doesn't expire, use the DAST_API_OVERRIDES_ENV variable to provide it. This

variable's content is a JSON snippet that provides headers and cookies to add to DAST API's

outgoing HTTP requests.

Follow these steps to provide the Bearer token with DAST_API_OVERRIDES_ENV:

- Create a CI/CD variable, for example TEST_API_BEARERAUTH, with the value {"headers":{"Authorization":"Bearer dXNlcm5hbWU6cGFzc3dvcmQ="}} (substitute your token). You can create CI/CD variables from the GitLab project's page at Settings > CI/CD, in the Variables section. Due to the format of TEST_API_BEARERAUTH, it's not possible to mask the variable. To mask the token's value, you can create a second variable with the token value, and define TEST_API_BEARERAUTH with the value {"headers":{"Authorization":"Bearer $MASKED_VARIABLE"}}.

- In your .gitlab-ci.yml file, set DAST_API_OVERRIDES_ENV to the variable you just created:

  stages:
    - dast

  include:
    - template: DAST-API.gitlab-ci.yml

  variables:
    DAST_API_PROFILE: Quick
    DAST_API_OPENAPI: test-api-specification.json
    DAST_API_TARGET_URL: http://test-deployment/
    DAST_API_OVERRIDES_ENV: $TEST_API_BEARERAUTH

- To validate that authentication is working, run a DAST API test and review the job logs and the test API's application logs.

Token generated at test runtime

If the Bearer token must be generated and doesn't expire during testing, you can provide DAST API with a file that has the token. A prior stage and job, or part of the DAST API job, can generate this file.

DAST API expects to receive a JSON file with the following structure:

{
  "headers" : {
    "Authorization" : "Bearer dXNlcm5hbWU6cGFzc3dvcmQ="
  }
}

This file can be generated by a prior stage and provided to DAST API through the DAST_API_OVERRIDES_FILE CI/CD variable.
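A script along the following lines could produce that file. This is a sketch under stated assumptions: the environment variable API_TOKEN and the function name are hypothetical, and how the token is actually obtained depends on your authentication service. Only the JSON structure and the file name dast-api-overrides.json come from the examples in this section.

```python
import json
import os


def write_overrides_file(token, path="dast-api-overrides.json"):
    """Write an overrides file that sets the Authorization header."""
    overrides = {"headers": {"Authorization": f"Bearer {token}"}}
    with open(path, "w", encoding="utf-8") as fd:
        json.dump(overrides, fd)
    return path


# API_TOKEN is a hypothetical variable name; the fallback is the
# placeholder token used in the examples above.
token = os.environ.get("API_TOKEN", "dXNlcm5hbWU6cGFzc3dvcmQ=")
overrides_path = write_overrides_file(token)
print(f"Wrote {overrides_path}")
```

If a separate job generates the file, it probably needs to reach the DAST API job as a job artifact, because each job starts from a fresh checkout.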

Set DAST_API_OVERRIDES_FILE in your .gitlab-ci.yml file:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_OVERRIDES_FILE: dast-api-overrides.json

To validate that authentication is working, run a DAST API test and review the job logs and the test API's application logs.

Token has short expiration

If the Bearer token must be generated and expires prior to the scan's completion, you can provide a program or script for the DAST API scanner to execute on a provided interval. The provided script runs in an Alpine Linux container that has Python 3 and Bash installed. If the Python script requires additional packages, it must detect this and install the packages at runtime.

The script must create a JSON file containing the Bearer token in a specific format:

{
  "headers" : {
    "Authorization" : "Bearer dXNlcm5hbWU6cGFzc3dvcmQ="
  }
}

You must provide three CI/CD variables, each set for correct operation:

- DAST_API_OVERRIDES_FILE: JSON file the provided command generates.

- DAST_API_OVERRIDES_CMD: Command that generates the JSON file.

- DAST_API_OVERRIDES_INTERVAL: Interval (in seconds) to run command.

For example:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_OVERRIDES_FILE: dast-api-overrides.json
  DAST_API_OVERRIDES_CMD: renew_token.py
  DAST_API_OVERRIDES_INTERVAL: 300

To validate that authentication is working, run a DAST API test and review the job logs and the test API's application logs. See the overrides section for more information about override commands.

Configuration files

To get you started quickly, GitLab provides the configuration file

gitlab-dast-api-config.yml.

This file has several testing profiles that perform various numbers of tests. The run time of each

profile increases as the test numbers go up. To use a configuration file, add it to your

repository's root as .gitlab/gitlab-dast-api-config.yml.

Profiles

The following profiles are pre-defined in the default configuration file. Profiles can be added, removed, and modified by creating a custom configuration.

Passive

- Application Information Check

- Cleartext Authentication Check

- JSON Hijacking Check

- Sensitive Information Check

- Session Cookie Check

Quick

- Application Information Check

- Cleartext Authentication Check

- Framework Debug Mode Check

- HTML Injection Check

- Insecure Http Methods Check

- JSON Hijacking Check

- JSON Injection Check

- Sensitive Information Check

- Session Cookie Check

- SQL Injection Check

- Token Check

- XML Injection Check

Full

- Application Information Check

- Cleartext Authentication Check

- CORS Check

- DNS Rebinding Check

- Framework Debug Mode Check

- HTML Injection Check

- Insecure Http Methods Check

- JSON Hijacking Check

- JSON Injection Check

- Open Redirect Check

- Sensitive File Check

- Sensitive Information Check

- Session Cookie Check

- SQL Injection Check

- TLS Configuration Check

- Token Check

- XML Injection Check

Available CI/CD variables

| CI/CD variable | Description |
|---|---|
| SECURE_ANALYZERS_PREFIX | Specify the Docker registry base address from which to download the analyzer. |
| DAST_API_VERSION | Specify DAST API container version. Defaults to 2. |
| DAST_API_IMAGE_SUFFIX | Specify a container image suffix. Defaults to none. |
| DAST_API_API_PORT | Specify the communication port number used by DAST API engine. Defaults to 5500. Introduced in GitLab 15.5. |
| DAST_API_TARGET_URL | Base URL of API testing target. |
| DAST_API_CONFIG | DAST API configuration file. Defaults to .gitlab-dast-api.yml. |
| DAST_API_PROFILE | Configuration profile to use during testing. Defaults to Quick. |
| DAST_API_EXCLUDE_PATHS | Exclude API URL paths from testing. |
| DAST_API_EXCLUDE_URLS | Exclude API URL from testing. Introduced in GitLab 14.10. |
| DAST_API_EXCLUDE_PARAMETER_ENV | JSON string containing excluded parameters. |
| DAST_API_EXCLUDE_PARAMETER_FILE | Path to a JSON file containing excluded parameters. |
| DAST_API_REQUEST_HEADERS | A comma-separated (,) list of headers to include on each scan request. Consider using DAST_API_REQUEST_HEADERS_BASE64 when storing secret header values in a masked variable, which has character set restrictions. |
| DAST_API_REQUEST_HEADERS_BASE64 | A comma-separated (,) list of headers to include on each scan request, Base64-encoded. Introduced in GitLab 15.6. |
| DAST_API_OPENAPI | OpenAPI specification file or URL. |
| DAST_API_OPENAPI_RELAXED_VALIDATION | Relax document validation. Default is disabled. Introduced in GitLab 14.7. |
| DAST_API_OPENAPI_ALL_MEDIA_TYPES | Use all supported media types instead of one when generating requests. Causes test duration to be longer. Default is disabled. Introduced in GitLab 14.10. |
| DAST_API_OPENAPI_MEDIA_TYPES | Colon (:) separated media types accepted for testing. Default is disabled. Introduced in GitLab 14.10. |
| DAST_API_HAR | HTTP Archive (HAR) file. |
| DAST_API_GRAPHQL | Path to GraphQL endpoint, for example /api/graphql. Introduced in GitLab 15.4. |
| DAST_API_GRAPHQL_SCHEMA | A URL or filename for a GraphQL schema in JSON format. Introduced in GitLab 15.4. |
| DAST_API_POSTMAN_COLLECTION | Postman Collection file. |
| DAST_API_POSTMAN_COLLECTION_VARIABLES | Path to a JSON file to extract Postman variable values. The support for comma-separated (,) files was introduced in GitLab 15.1. |
| DAST_API_OVERRIDES_FILE | Path to a JSON file containing overrides. |
| DAST_API_OVERRIDES_ENV | JSON string containing headers to override. |
| DAST_API_OVERRIDES_CMD | Overrides command. |
| DAST_API_OVERRIDES_CMD_VERBOSE | When set to any value, shows overrides command output as part of the job output. Introduced in GitLab 14.6. |
| DAST_API_PRE_SCRIPT | Run user command or script before scan session starts. |
| DAST_API_POST_SCRIPT | Run user command or script after scan session has finished. |
| DAST_API_OVERRIDES_INTERVAL | How often to run overrides command in seconds. Defaults to 0 (once). |
| DAST_API_HTTP_USERNAME | Username for HTTP authentication. |
| DAST_API_HTTP_PASSWORD | Password for HTTP authentication. Consider using DAST_API_HTTP_PASSWORD_BASE64 instead. |
| DAST_API_HTTP_PASSWORD_BASE64 | Password for HTTP authentication, Base64-encoded. Introduced in GitLab 15.4. |
| DAST_API_SERVICE_START_TIMEOUT | How long to wait for target API to become available in seconds. Default is 300 seconds. |
| DAST_API_TIMEOUT | How long to wait for API responses in seconds. Default is 30 seconds. |

Overrides

DAST API provides a method to add or override specific items in your request, for example:

- Headers

- Cookies

- Query string

- Form data

- JSON nodes

- XML nodes

You can use this to inject semantic version headers, authentication, and so on. The authentication section includes examples of using overrides for that purpose.

Overrides use a JSON document, where each type of override is represented by a JSON object:

{
  "headers": {
    "header1": "value",
    "header2": "value"
  },
  "cookies": {
    "cookie1": "value",
    "cookie2": "value"
  },
  "query": {
    "query-string1": "value",
    "query-string2": "value"
  },
  "body-form": {
    "form-param1": "value",
    "form-param2": "value"
  },
  "body-json": {
    "json-path1": "value",
    "json-path2": "value"
  },
  "body-xml" : {
    "xpath1": "value",
    "xpath2": "value"
  }
}

Example of setting a single header:

{
  "headers": {
    "Authorization": "Bearer dXNlcm5hbWU6cGFzc3dvcmQ="
  }
}

Example of setting both a header and cookie:

{
  "headers": {
    "Authorization": "Bearer dXNlcm5hbWU6cGFzc3dvcmQ="
  },
  "cookies": {
    "flags": "677"
  }
}

Example usage for setting a body-form override:

{
  "body-form": {
    "username": "john.doe"
  }
}

The override engine uses body-form when the request body has only form-data content.

Example usage for setting a body-json override:

{
  "body-json": {
    "$.credentials.access-token": "iddqd!42.$"
  }
}

Each JSON property name in the object body-json is set to a JSON Path expression. The JSON Path expression $.credentials.access-token identifies the node to be overridden with the value iddqd!42.$. The override engine uses body-json when the request body has only JSON content.
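To make the effect of a body-json entry concrete, the following sketch applies such an override to a request body in Python. It is an illustration only and not the override engine's implementation: the function name is hypothetical, and it handles just simple dotted paths of the form `$.a.b`, not the full JSON Path syntax.

```python
import copy


def apply_json_override(body, json_path, value):
    """Return a copy of body with the node at a dotted JSON Path replaced."""
    if not json_path.startswith("$."):
        raise ValueError(f"unsupported JSON Path: {json_path}")
    keys = json_path[2:].split(".")
    result = copy.deepcopy(body)
    node = result
    # Walk down to the parent of the target node.
    for key in keys[:-1]:
        node = node[key]
    node[keys[-1]] = value
    return result


body = {"credentials": {"username": "john.doe", "access-token": "non-valid-password"}}
overrides = {"body-json": {"$.credentials.access-token": "iddqd!42.$"}}

for path, value in overrides["body-json"].items():
    body = apply_json_override(body, path, value)

print(body["credentials"]["access-token"])  # iddqd!42.$
```

Only the addressed node changes; sibling nodes such as username are left as they were.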

For example, if the body is set to the following JSON:

{
  "credentials" : {
    "username" :"john.doe",
    "access-token" : "non-valid-password"
  }
}

It is changed to:

{
  "credentials" : {
    "username" :"john.doe",
    "access-token" : "iddqd!42.$"
  }
}

Here's an example for setting a body-xml override. The first entry overrides an XML attribute and the second entry overrides an XML element:

{
  "body-xml" : {
    "/credentials/@isEnabled": "true",
    "/credentials/access-token/text()" : "iddqd!42.$"
  }
}

Each JSON property name in the object body-xml is set to an XPath v2 expression. The XPath expression /credentials/@isEnabled identifies the attribute node to override with the value true. The XPath expression /credentials/access-token/text() identifies the element node to override with the value iddqd!42.$. The override engine uses body-xml when the request body has only XML content.
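The two kinds of body-xml entry (attribute and element text) can be sketched with Python's standard library. This is a simplified illustration, not the override engine: the function name is hypothetical, and because xml.etree.ElementTree supports only a small XPath subset, the sketch handles only absolute paths that end in `@attribute` or `text()`.

```python
import xml.etree.ElementTree as ET


def apply_xml_override(root, xpath, value):
    """Apply one override for a path ending in @attribute or text()."""
    steps = xpath.strip("/").split("/")
    if len(steps) < 2 or steps[0] != root.tag:
        raise ValueError(f"unsupported XPath: {xpath}")
    # Everything between the root and the final step names child elements.
    *element_steps, target = steps[1:]
    node = root
    for step in element_steps:
        node = node.find(step)
        if node is None:
            raise ValueError(f"no element matches: {xpath}")
    if target.startswith("@"):
        node.set(target[1:], value)
    elif target == "text()":
        node.text = value
    else:
        raise ValueError(f"unsupported XPath: {xpath}")


xml_body = """<credentials isEnabled="false">
  <username>john.doe</username>
  <access-token>non-valid-password</access-token>
</credentials>"""

overrides = {
    "/credentials/@isEnabled": "true",
    "/credentials/access-token/text()": "iddqd!42.$",
}

root = ET.fromstring(xml_body)
for xpath, value in overrides.items():
    apply_xml_override(root, xpath, value)

print(ET.tostring(root, encoding="unicode"))
```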

For example, if the body is set to the following XML:

<credentials isEnabled="false">
  <username>john.doe</username>
  <access-token>non-valid-password</access-token>
</credentials>

It is changed to:

<credentials isEnabled="true">
  <username>john.doe</username>
  <access-token>iddqd!42.$</access-token>
</credentials>

You can provide this JSON document as a file or environment variable. You may also provide a command to generate the JSON document. The command can run at intervals to support values that expire.

Using a file

To provide the overrides JSON as a file, set the DAST_API_OVERRIDES_FILE CI/CD variable. The path is relative to the job's current working directory.

Here's an example .gitlab-ci.yml:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_OVERRIDES_FILE: dast-api-overrides.json

Using a CI/CD variable

To provide the overrides JSON as a CI/CD variable, use the DAST_API_OVERRIDES_ENV variable.

This allows you to place the JSON as variables that can be masked and protected.

In this example .gitlab-ci.yml, the DAST_API_OVERRIDES_ENV variable is set directly to the JSON:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_OVERRIDES_ENV: '{"headers":{"X-API-Version":"2"}}'

In this example .gitlab-ci.yml, the SECRET_OVERRIDES variable provides the JSON. This is a group or instance level CI/CD variable defined in the UI:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_OVERRIDES_ENV: $SECRET_OVERRIDES

Using a command

If the value must be generated or regenerated on expiration, you can provide a program or script for the DAST API scanner to execute on a specified interval. The provided command runs in an Alpine Linux container that has Python 3 and Bash installed.

You have to set the environment variable DAST_API_OVERRIDES_CMD to the program or script you would like

to execute. The provided command creates the overrides JSON file as defined previously.

You might want to install other scripting runtimes like NodeJS or Ruby, or maybe you need to install a dependency for

your overrides command. In this case, we recommend setting the DAST_API_PRE_SCRIPT to the file path of a script which

provides those prerequisites. The script provided by DAST_API_PRE_SCRIPT is executed once, before the analyzer starts.

See the Alpine Linux package management page for information about installing Alpine Linux packages.

You must provide three CI/CD variables, each set for correct operation:

- DAST_API_OVERRIDES_FILE: File generated by the provided command.

- DAST_API_OVERRIDES_CMD: Overrides command in charge of generating the overrides JSON file periodically.

- DAST_API_OVERRIDES_INTERVAL: Interval in seconds to run command.

Optionally:

- DAST_API_PRE_SCRIPT: Script to install runtimes or dependencies before the scan starts.

WARNING:

To execute scripts in Alpine Linux you must first use the command chmod to set the execution permission. For example, to set the execution permission of script.py for everyone, use the command: chmod a+x script.py. If needed, you can version your script.py with the execution permission already set.

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_OVERRIDES_FILE: dast-api-overrides.json
  DAST_API_OVERRIDES_CMD: renew_token.py
  DAST_API_OVERRIDES_INTERVAL: 300

Debugging overrides

Introduced in GitLab 14.8.

By default, the output of the overrides command is hidden. If the overrides command returns a non-zero exit code, the command is displayed as part of your job output. Optionally, you can set the variable DAST_API_OVERRIDES_CMD_VERBOSE to any value to display overrides command output as it is generated. This is useful when testing your overrides script, but should be disabled afterwards as it slows down testing.

It is also possible to write messages from your script to a log file that is collected when the job completes or fails. The log file must be created in a specific location and following a naming convention.

Adding some basic logging to your overrides script is useful in case the script fails unexpectedly during standard running of the job. The log file is automatically included as an artifact of the job, allowing you to download it after the job has finished.

Following our example, we provided renew_token.py in the environment variable DAST_API_OVERRIDES_CMD. Notice two things in the script:

- Log file is saved in the location indicated by the environment variable CI_PROJECT_DIR.

- Log filename should match gl-*.log.

#!/usr/bin/env python

# Example of an overrides command

# Override commands can update the overrides json file
# with new values to be used. This is a great way to
# update an authentication token that will expire
# during testing.

import logging
import json
import os
import requests
import backoff

# [1] Store log file in directory indicated by env var CI_PROJECT_DIR
working_directory = os.environ.get('CI_PROJECT_DIR')
overrides_file_name = os.environ.get('DAST_API_OVERRIDES_FILE', 'dast-api-overrides.json')
overrides_file_path = os.path.join(working_directory, overrides_file_name)

# [2] File name should match the pattern: gl-*.log
log_file_path = os.path.join(working_directory, 'gl-user-overrides.log')

# Set up logger
logging.basicConfig(filename=log_file_path, level=logging.DEBUG)

# Use `backoff` decorator to retry in case of transient errors.
@backoff.on_exception(backoff.expo,
                      (requests.exceptions.Timeout,
                       requests.exceptions.ConnectionError),
                      max_time=30)
def get_auth_response():
    authorization_url = 'https://authorization.service/api/get_api_token'
    return requests.get(
        f'{authorization_url}',
        auth=(os.environ.get('AUTH_USER'), os.environ.get('AUTH_PWD'))
    )

# In our example, access token is retrieved from a given endpoint
try:
    # Performs a http request, response sample:
    # { "Token" : "b5638ae7-6e77-4585-b035-7d9de2e3f6b3" }
    response = get_auth_response()

    # Check that the request is successful. may raise `requests.exceptions.HTTPError`
    response.raise_for_status()

    # Gets JSON data
    response_body = response.json()

# If needed specific exceptions can be caught
# requests.ConnectionError : A network connection error problem occurred
# requests.HTTPError : HTTP request returned an unsuccessful status code. [Response.raise_for_status()]
# requests.ConnectTimeout : The request timed out while trying to connect to the remote server
# requests.ReadTimeout : The server did not send any data in the allotted amount of time.
# requests.TooManyRedirects : The request exceeds the configured number of maximum redirections
# requests.exceptions.RequestException : All exceptions that related to Requests

except json.JSONDecodeError as json_decode_error:
    # logs errors related decoding JSON response
    logging.error(f'Error, failed while decoding JSON response. Error message: {json_decode_error}')
    raise
except requests.exceptions.RequestException as requests_error:
    # logs exceptions related to `Requests`
    logging.error(f'Error, failed while performing HTTP request. Error message: {requests_error}')
    raise
except Exception as e:
    # logs any other error
    logging.error(f'Error, unknown error while retrieving access token. Error message: {e}')
    raise

# computes object that holds overrides file content.
# It uses data fetched from request
overrides_data = {
    "headers": {
        "Authorization": f"Token {response_body['Token']}"
    }
}

# log entry informing about the file override computation
logging.info("Creating overrides file: %s" % overrides_file_path)

# attempts to overwrite the file
try:
    if os.path.exists(overrides_file_path):
        os.unlink(overrides_file_path)

    # overwrites the file with our updated dictionary
    with open(overrides_file_path, "wb+") as fd:
        fd.write(json.dumps(overrides_data).encode('utf-8'))
except Exception as e:
    # logs any other error
    logging.error(f'Error, unknown error when overwriting file {overrides_file_path}. Error message: {e}')
    raise

# logs informing override has finished successfully
logging.info("Override file has been updated")

# end

In the overrides command example, the Python script depends on the backoff library. To make sure the library is installed before executing the Python script, the DAST_API_PRE_SCRIPT is set to a script that installs the dependencies of your overrides command.

For example, the following script, user-pre-scan-set-up.sh, installs the dependencies:

#!/bin/bash

# user-pre-scan-set-up.sh
# Ensures python dependencies are installed

echo "**** install python dependencies ****"

python3 -m ensurepip
pip3 install --no-cache --upgrade \
    pip \
    backoff

echo "**** python dependencies installed ****"

# end

You have to update your configuration to set the DAST_API_PRE_SCRIPT to our new user-pre-scan-set-up.sh script. For example:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_PRE_SCRIPT: user-pre-scan-set-up.sh
  DAST_API_OVERRIDES_FILE: dast-api-overrides.json
  DAST_API_OVERRIDES_CMD: renew_token.py
  DAST_API_OVERRIDES_INTERVAL: 300

In the previous sample, you could also use the script user-pre-scan-set-up.sh to install new runtimes or applications that your overrides command uses later.

Request Headers

The request headers feature lets you specify fixed values for the headers during the scan session. For example, you can use the configuration variable DAST_API_REQUEST_HEADERS to set a fixed value in the Cache-Control header. If the headers you need to set include sensitive values like the Authorization header, use the masked variable feature along with the variable DAST_API_REQUEST_HEADERS_BASE64.

If the Authorization header or any other header needs to get updated while the scan is in progress, consider using the overrides feature.

The variable DAST_API_REQUEST_HEADERS lets you specify a comma-separated (,) list of headers. These headers are included on each request that the scanner performs. Each header entry in the list consists of a name followed by a colon (:) and then by its value. Whitespace before the key or value is ignored. For example, to declare a header name Cache-Control with the value max-age=604800, the header entry is Cache-Control: max-age=604800. To use two headers, Cache-Control: max-age=604800 and Age: 100, set DAST_API_REQUEST_HEADERS variable to Cache-Control: max-age=604800, Age: 100.

The order in which the different headers are provided into the variable DAST_API_REQUEST_HEADERS does not affect the result. Setting DAST_API_REQUEST_HEADERS to Cache-Control: max-age=604800, Age: 100 produces the same result as setting it to Age: 100, Cache-Control: max-age=604800.
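The header list format described above can be illustrated with a small parser. This is a sketch of the documented format only, not the scanner's own parsing code: the function name is hypothetical, it strips whitespace on both sides of names and values, and it does not attempt to handle header values that themselves contain commas.

```python
def parse_request_headers(value):
    """Parse a 'Name: value, Name: value' list into a dictionary."""
    headers = {}
    for entry in value.split(","):
        if not entry.strip():
            continue
        # Split on the first colon only, so values may contain colons.
        name, _, header_value = entry.partition(":")
        headers[name.strip()] = header_value.strip()
    return headers


first = parse_request_headers("Cache-Control: max-age=604800, Age: 100")
second = parse_request_headers("Age: 100, Cache-Control: max-age=604800")

print(first)
print(first == second)  # True
```

Both orderings yield the same set of headers, which matches the statement that the order of entries does not affect the result.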

Base64

The DAST_API_REQUEST_HEADERS_BASE64 variable accepts the same list of headers as DAST_API_REQUEST_HEADERS, with the only difference that the entire value of the variable must be Base64-encoded. For example, to send the header list Authorization: QmVhcmVyIFRPS0VO, Cache-control: bm8tY2FjaGU=, convert the whole list to its Base64 equivalent, QXV0aG9yaXphdGlvbjogUW1WaGNtVnlJRlJQUzBWTywgQ2FjaGUtY29udHJvbDogYm04dFkyRmphR1U9, and set DAST_API_REQUEST_HEADERS_BASE64 to that encoded value. This is useful when storing secret header values in a masked variable, which has character set restrictions.

WARNING: Base64 is used to support the masked variable feature. Base64 encoding is not by itself a security measure, because sensitive values can be easily decoded.
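As a minimal sketch, the encoded value can be produced with Python's standard base64 module. The helper name encode_request_headers is hypothetical; the input is the example header list from this section.

```python
import base64


def encode_request_headers(header_list: str) -> str:
    """Base64-encode an entire header list for DAST_API_REQUEST_HEADERS_BASE64."""
    return base64.b64encode(header_list.encode("utf-8")).decode("ascii")


plain = "Authorization: QmVhcmVyIFRPS0VO, Cache-control: bm8tY2FjaGU="
encoded = encode_request_headers(plain)
print(encoded)

# Decoding returns the original list, which is why Base64 alone protects nothing.
decoded = base64.b64decode(encoded).decode("utf-8")
```

The whole list is encoded as one string, not each header separately.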

Example: Adding a list of headers on each request using plain text

In the following example of a .gitlab-ci.yml, the DAST_API_REQUEST_HEADERS configuration variable is set to provide two header values as explained in request headers.

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_REQUEST_HEADERS: 'Cache-control: no-cache, Save-Data: on'

Example: Using a masked CI/CD variable

The following .gitlab-ci.yml sample assumes the masked variable SECRET_REQUEST_HEADERS_BASE64 is defined in the UI as a group-level or instance-level CI/CD variable. The value of SECRET_REQUEST_HEADERS_BASE64 is set to WC1BQ01FLVNlY3JldDogczNjcjN0ISwgWC1BQ01FLVRva2VuOiA3MDVkMTZmNWUzZmI=, which is the Base64-encoded text version of X-ACME-Secret: s3cr3t!, X-ACME-Token: 705d16f5e3fb. Then, it can be used as follows:

stages:
  - dast

include:
  - template: DAST-API.gitlab-ci.yml

variables:
  DAST_API_PROFILE: Quick
  DAST_API_OPENAPI: test-api-specification.json
  DAST_API_TARGET_URL: http://test-deployment/
  DAST_API_REQUEST_HEADERS_BASE64: $SECRET_REQUEST_HEADERS_BASE64

Consider using DAST_API_REQUEST_HEADERS_BASE64 when storing secret header values in a masked variable, which has character set restrictions.

Exclude Paths

Introduced in GitLab 14.0.

When testing an API, it can be useful to exclude certain paths. For example, you might exclude testing of an authentication service or an older version of the API. To exclude paths, use the DAST_API_EXCLUDE_PATHS CI/CD variable. This variable is specified in your .gitlab-ci.yml file. To exclude multiple paths, separate entries using the ; character. In the provided paths, you can use ? as a single-character wildcard and * as a multiple-character wildcard.
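To get a feel for how such patterns behave, the following Python sketch approximates the matching with the standard fnmatch module. This is an assumption for illustration, not the scanner's implementation: fnmatch also gives [ and ] special meaning, and the scanner's exact rules may differ. The function name is_excluded is hypothetical.

```python
from fnmatch import fnmatchcase


def is_excluded(path: str, exclude_paths: str) -> bool:
    """Return True if path matches any ;-separated pattern (approximation)."""
    patterns = [pattern for pattern in exclude_paths.split(";") if pattern]
    # fnmatchcase: ? matches one character, * matches any run of characters.
    return any(fnmatchcase(path, pattern) for pattern in patterns)


for candidate in ["/auth", "/auth/child", "/v1/users", "/v2/users"]:
    print(candidate, is_excluded(candidate, "/auth*;/v1/*"))
```

In this approximation a pattern without wildcards matches only the exact path, so /auth does not cover /auth/child, while /auth* does.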

To verify the paths are excluded, review the Tested Operations and Excluded Operations portion of the job output. You should not see any excluded paths listed under Tested Operations.

2021-05-27 21:51:08 [INF] DAST API: --[ Tested Operations ]-------------------------
2021-05-27 21:51:08 [INF] DAST API: 201 POST http://target:7777/api/users CREATED
2021-05-27 21:51:08 [INF] DAST API: ------------------------------------------------
2021-05-27 21:51:08 [INF] DAST API: --[ Excluded Operations ]-----------------------
2021-05-27 21:51:08 [INF] DAST API: GET http://target:7777/api/messages
2021-05-27 21:51:08 [INF] DAST API: POST http://target:7777/api/messages
2021-05-27 21:51:08 [INF] DAST API: ------------------------------------------------

Examples

This example excludes the /auth resource. This does not exclude child resources (/auth/child).

variables:
  DAST_API_EXCLUDE_PATHS: /auth

To exclude /auth and its child resources (/auth/child), use a wildcard.

variables:
  DAST_API_EXCLUDE_PATHS: /auth*

To exclude multiple paths, use the ; character. This example excludes /auth* and /v1/*.

variables:
  DAST_API_EXCLUDE_PATHS: /auth*;/v1/*

To exclude one or more nested levels within a path, use **. This example tests the /api/v1/ and /api/v2/ endpoints of a data query that requests mass, brightness, and coordinates data for planet, moon, star, and satellite objects. Example paths that could be scanned include, but are not limited to:

- /api/v2/planet/coordinates

- /api/v1/star/mass

- /api/v2/satellite/brightness

In this example we test the brightness endpoint only:

variables:
  DAST_API_EXCLUDE_PATHS: /api/**/mass;/api/**/coordinates

Exclude parameters

Introduced in GitLab 14.10.

While testing an API, you might want to exclude a parameter (query string, header, or body element) from testing. This may be needed because a parameter always causes a failure, slows down testing, or for other reasons. To exclude parameters, you can set one of the following variables: DAST_API_EXCLUDE_PARAMETER_ENV or DAST_API_EXCLUDE_PARAMETER_FILE.

The DAST_API_EXCLUDE_PARAMETER_ENV variable accepts a JSON string containing the excluded parameters. This is a good option if the JSON is short and does not change often. Another option is the variable DAST_API_EXCLUDE_PARAMETER_FILE. This variable is set to a file path that can be checked into the repository, created by another job as an artifact, or generated at runtime with a pre-script using DAST_API_PRE_SCRIPT.

Exclude parameters using a JSON document

The JSON document contains a JSON object that uses specific properties to identify which parameters should be excluded. You can provide the following properties to exclude specific parameters during the scanning process:

- headers: Use this property to exclude specific headers. The property's value is an array of header names to be excluded. Names are case-insensitive.

- cookies: Use this property to exclude specific cookies. The property's value is an array of cookie names to be excluded. Names are case-sensitive.

- query: Use this property to exclude specific fields from the query string. The property's value is an array of field names from the query string to be excluded. Names are case-sensitive.

- body-form: Use this property to exclude specific fields from a request that uses the media type application/x-www-form-urlencoded. The property's value is an array of the field names from the body to be excluded. Names are case-sensitive.

- body-json: Use this property to exclude specific JSON nodes from a request that uses the media type application/json. The property's value is an array. Each entry of the array is a JSON Path expression.

- body-xml: Use this property to exclude specific XML nodes from a request that uses the media type application/xml. The property's value is an array. Each entry of the array is an XPath v2 expression.

Thus, the following JSON document is an example of the expected structure to exclude parameters.

{
  "headers": [
    "header1",
    "header2"
  ],
  "cookies": [
    "cookie1",
    "cookie2"
  ],
  "query": [
    "query-string1",
    "query-string2"
  ],
  "body-form": [
    "form-param1",
    "form-param2"
  ],
  "body-json": [
    "json-path-expression-1",
    "json-path-expression-2"
  ],
  "body-xml": [
    "xpath-expression-1",
    "xpath-expression-2"
  ]
}

Examples

Excluding a single header

To exclude the header Upgrade-Insecure-Requests, set the headers property's value to an array with the header name: [ "Upgrade-Insecure-Requests" ]. For instance, the JSON document looks like this:

{
  "headers": [ "Upgrade-Insecure-Requests" ]
}

Header names are case-insensitive, so the header name UPGRADE-INSECURE-REQUESTS is equivalent to Upgrade-Insecure-Requests.
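The difference between case-insensitive header names and case-sensitive names for the other properties can be sketched in Python as follows. The helper is_name_excluded is hypothetical and only mirrors the comparison rules stated in this section, not the scanner's code.

```python
def is_name_excluded(kind: str, name: str, excluded: dict) -> bool:
    """Check a parameter name against an exclude-parameters document."""
    names = excluded.get(kind, [])
    if kind == "headers":
        # Header names are compared without regard to case.
        return name.lower() in {entry.lower() for entry in names}
    # cookies, query, and body-form names are compared exactly.
    return name in names


excluded = {
    "headers": ["Upgrade-Insecure-Requests"],
    "cookies": ["PHPSESSID"],
}
print(is_name_excluded("headers", "UPGRADE-INSECURE-REQUESTS", excluded))
print(is_name_excluded("cookies", "phpsessid", excluded))
```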

Excluding both a header and two cookies

To exclude the header Authorization, and the cookies PHPSESSID and csrftoken, set the headers property's value to an array with header name [ "Authorization" ] and the cookies property's value to an array with the cookies' names [ "PHPSESSID", "csrftoken" ]. For instance, the JSON document looks like this:

{
  "headers": [ "Authorization" ],
  "cookies": [ "PHPSESSID", "csrftoken" ]
}
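If the exclusions are generated at runtime, a pre-script could write the document to a file. The following Python sketch shows one way to do that; the function name write_exclude_parameters and the file name dast-api-exclude-parameters.json are hypothetical choices for illustration.

```python
import json
from pathlib import Path


def write_exclude_parameters(path, headers=(), cookies=()):
    """Write an exclude-parameters JSON document and return it as a dict."""
    document = {}
    if headers:
        document["headers"] = list(headers)
    if cookies:
        document["cookies"] = list(cookies)
    Path(path).write_text(json.dumps(document, indent=2), encoding="utf-8")
    return document


# Hypothetical file name; any path the scan job can read would do.
written = write_exclude_parameters(
    "dast-api-exclude-parameters.json",
    headers=["Authorization"],
    cookies=["PHPSESSID", "csrftoken"],
)
print(json.dumps(written))
```

The variable DAST_API_EXCLUDE_PARAMETER_FILE would then be set to the path of the generated file.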

Excluding a body-form parameter

To exclude the password field in a request that uses application/x-www-form-urlencoded, set the body-form property's value to an array with the field name [ "password" ]. For instance, the JSON document looks like this:

{
  "body-form": [ "password" ]
}

The exclude parameters feature uses body-form when the request uses the content type application/x-www-form-urlencoded.

Excluding a specific JSON node using JSON Path

To exclude the schema property in the root object, set the body-json property's value to an array with the JSON Path expression [ "$.schema" ].

The JSON Path expression uses special syntax to identify JSON nodes: $ refers to the root of the JSON document, . refers to the current object (in our case the root object), and the text schema refers to a property name. Thus, the JSON path expression $.schema refers to a property schema in the root object.

For instance, the JSON document looks like this:

{
  "body-json": [ "$.schema" ]
}

The exclude parameters feature uses body-json when the request uses the content type application/json. Each entry in body-json is expected to be a JSON Path expression. In JSON Path, characters like $, *, and . (among others) have special meaning.
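To see which nodes an expression selects, the following Python sketch evaluates a small subset of JSON Path: the root $, property access with .name, and array access with [index] or [*]. It is a simplified stand-in written for illustration, not the JSON Path engine the scanner uses, and the sample request body is made up.

```python
import re

# One path segment: either .property or [index] / [*].
_SEGMENT = re.compile(r"\.([A-Za-z_][\w-]*)|\[(\*|\d+)\]")


def select(document, expression):
    """Return the list of nodes selected by a simple JSON Path expression."""
    if not expression.startswith("$"):
        raise ValueError("expression must start with $")
    nodes = [document]
    position = 1
    while position < len(expression):
        match = _SEGMENT.match(expression, position)
        if match is None:
            raise ValueError(f"unsupported syntax at offset {position}")
        name, index = match.groups()
        selected = []
        for node in nodes:
            if name is not None:
                if isinstance(node, dict) and name in node:
                    selected.append(node[name])
            elif index == "*":
                if isinstance(node, list):
                    selected.extend(node)  # every element of the array
            elif isinstance(node, list) and int(index) < len(node):
                selected.append(node[int(index)])
        nodes = selected
        position = match.end()
    return nodes


body = {
    "schema": "v1",
    "users": [
        {"name": "ana", "password": "x"},
        {"name": "bob", "password": "y"},
    ],
}
print(select(body, "$.schema"))
print(select(body, "$.users[*].password"))
```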

Excluding multiple JSON nodes using JSON Path

To exclude the property password on each entry of an array of users at the root level, set the body-json property's value to an array with the JSON Path expression [ "$.users[*].password" ].

The JSON Path expression starts with $ to refer to the root node and uses . to refer to the current node. Then, it uses users to refer to a property and the characters [ and ] to enclose the index in the array you want to use. Instead of providing a number as an index, you use * to specify any index. After the index reference, we find . which now refers to any given selected index in the array, followed by the property name password.

For instance, the JSON document looks like this:

{
  "body-json": [ "$.users[*].password" ]